Among the many wonders of science, artificial intelligence (AI) stands out for enabling machines to exhibit intelligent behavior. Machine learning (ML), a subfield of AI in which systems learn patterns from data, has changed the face of technology. ML now plays a role in many technical and non-technical processes, from enhancing everyday digital applications to assisting in robotic interventions. For Android, one of the most popular mobile app development platforms, integrating ML offers numerous benefits to businesses and their products. Our topic today is the impact of machine learning on native Android apps. This guide explores on-device machine learning for Android app development services.

Let’s go over some of the advantages and implementation strategies.

Why Machine Learning?

To understand the significance of machine learning in native Android apps, we should first look at what ML can do. These capabilities translate directly into features that benefit Android apps. The most prominent are:

- Object, gesture, and face recognition

- Speech recognition and natural language processing

- Recommendation and fraud detection systems

- Spam detection and personalization

ML can also boost existing app functionalities such as search, recommendations, translation, image classification, and fraud detection.

On-Device Machine Learning

On-device machine learning represents a shift from traditional cloud-based ML:

- Direct Processing: ML tasks are executed directly on devices like smartphones or tablets.

- Independence from the Cloud: It functions without constant cloud connectivity.

- Local Data Processing: Processing data locally ensures quicker response times and immediate inference, where inference means applying a trained ML model to input data to make predictions.
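To make "inference" concrete: once a model is trained, running it on the device is just evaluating a fixed function of the input with weights that ship with the app. The Java sketch below assumes a hypothetical two-feature logistic-regression model (`TinyOnDeviceModel`, with made-up weights); a real app would load a TensorFlow Lite model instead, but the principle of fixed weights, local computation, and no network call is the same.

```java
/**
 * Minimal illustration of on-device inference. The "model" is a hypothetical
 * two-feature logistic regression whose weights were trained offline and shipped
 * with the app; prediction is a purely local computation with no network call.
 */
final class TinyOnDeviceModel {
    // Hypothetical pre-trained parameters (illustrative values, not from a real model).
    private static final float[] WEIGHTS = {1.5f, -2.0f};
    private static final float BIAS = 0.0f;

    private TinyOnDeviceModel() {}

    /** Applies the trained parameters to one input and returns P(positive class). */
    static float predict(float[] features) {
        if (features.length != WEIGHTS.length) {
            throw new IllegalArgumentException("Expected " + WEIGHTS.length + " features");
        }
        float z = BIAS;
        for (int i = 0; i < WEIGHTS.length; i++) {
            z += WEIGHTS[i] * features[i];
        }
        return (float) (1.0 / (1.0 + Math.exp(-z))); // logistic sigmoid
    }

    public static void main(String[] args) {
        System.out.println("P(positive) = " + predict(new float[] {0.8f, 0.1f}));
    }
}
```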

Why On-Device Machine Learning?

On-device machine learning solutions offer several benefits:

- Low Latency: Processing data directly on the device gives faster response times and a smoother user experience. This is crucial for real-time applications like gaming and augmented reality.

- Data Privacy: On-device ML keeps sensitive data on the device, reducing its exposure to third parties and network-based attacks. This is vital for apps handling personal or confidential information, for example in health or finance.

- Offline Support: Inference works regardless of network availability, which is useful in remote or low-connectivity areas and can save the mobile data and battery otherwise spent on network requests.

- Cost Saving: Minimizing data transfer to, and processing in, the cloud reduces cloud computing and storage costs. This benefits apps with high data volumes, such as video streaming and social media.

On-Device ML Applications

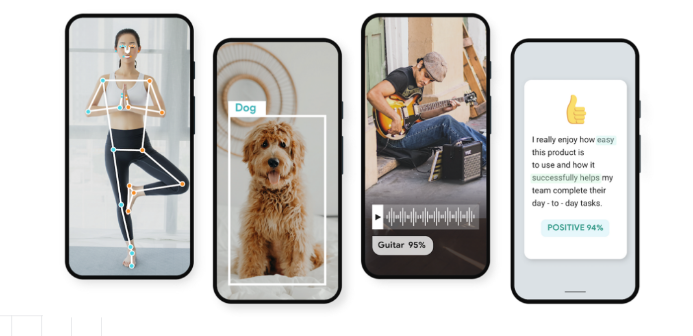

On-device ML performs especially well in the following areas:

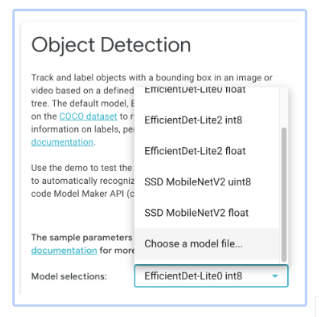

1. Object Detection

- Detects and highlights multiple objects in images or videos.

- Provides bounding box details.

- Classifies objects into predefined categories.

2. Gesture Detection

- Identifies specific finger gestures in real-time.

- Classifies gesture types.

- Offers detailed fingertip point information.
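Object detectors usually emit many overlapping candidate boxes, each with a label and a confidence score. Libraries such as ML Kit handle this internally, but if you run your own detection model you typically post-process the raw output yourself. The sketch below shows one common approach, confidence filtering followed by greedy non-maximum suppression based on intersection over union (IoU); the `DetectionFilter` class, its `Detection` type, and the thresholds are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Generic detection post-processing: score filtering plus greedy non-maximum suppression. */
final class DetectionFilter {

    /** Hypothetical result type: an axis-aligned bounding box with a label and score. */
    static final class Detection {
        final float left, top, right, bottom;
        final String label;
        final float score;

        Detection(float left, float top, float right, float bottom, String label, float score) {
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
            this.label = label;
            this.score = score;
        }

        float area() {
            return Math.max(0f, right - left) * Math.max(0f, bottom - top);
        }
    }

    private DetectionFilter() {}

    /** Intersection over union of two boxes, in [0, 1]. */
    static float iou(Detection a, Detection b) {
        float w = Math.min(a.right, b.right) - Math.max(a.left, b.left);
        float h = Math.min(a.bottom, b.bottom) - Math.max(a.top, b.top);
        if (w <= 0f || h <= 0f) {
            return 0f;
        }
        float intersection = w * h;
        return intersection / (a.area() + b.area() - intersection);
    }

    /**
     * Keeps detections scoring at least minScore; among same-label boxes that overlap
     * by more than iouThreshold, only the highest-scoring one survives.
     */
    static List<Detection> filter(List<Detection> detections, float minScore, float iouThreshold) {
        List<Detection> candidates = new ArrayList<>();
        for (Detection d : detections) {
            if (d.score >= minScore) {
                candidates.add(d);
            }
        }
        candidates.sort(Comparator.comparingDouble((Detection d) -> d.score).reversed());

        List<Detection> kept = new ArrayList<>();
        for (Detection candidate : candidates) {
            boolean suppressed = false;
            for (Detection k : kept) {
                if (k.label.equals(candidate.label) && iou(k, candidate) > iouThreshold) {
                    suppressed = true;
                    break;
                }
            }
            if (!suppressed) {
                kept.add(candidate);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<Detection> raw = List.of(
                new Detection(0, 0, 100, 100, "cat", 0.9f),
                new Detection(5, 5, 105, 105, "cat", 0.8f),       // near-duplicate, suppressed
                new Detection(200, 200, 260, 260, "dog", 0.3f));  // below the score threshold
        for (Detection d : filter(raw, 0.5f, 0.5f)) {
            System.out.println(d.label + " " + d.score);
        }
    }
}
```

Greedy suppression per label keeps, for each object, the box the model is most confident about, while still allowing overlapping boxes of different classes.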

Integrating On-Device Machine Learning

Several tools and frameworks are available for integrating on-device machine learning in Android native apps:

ML Kit

Developed by Google, ML Kit is an SDK offering pre-built ML models and APIs for tasks like barcode scanning, text recognition, face detection, and pose detection. ML Kit supports custom TensorFlow Lite models and AutoML Vision Edge models.

MediaPipe

Another Google framework, MediaPipe, is known for its customizability. It lets developers build custom ML pipelines from pre-built components such as graphs, calculators, and models.

Moreover, it offers ready-to-use solutions for face mesh detection, hand tracking, and object detection.

TFLite (TensorFlow Lite)

TFLite is a Google library that enables running TensorFlow models on mobile devices efficiently. Some of its key features include low latency, small binary size, hardware acceleration, model optimization, and metadata extraction capabilities.

ML Kit for Android Overview

ML Kit is a versatile SDK that integrates Google’s machine-learning expertise into custom mobile apps.

Key Features of ML Kit

- Wide Compatibility: Designed for both Android and iOS, it reaches a wide user base.

- Ready-to-Use Models: Offers Google’s pre-trained models for various functionalities.

- Support for Custom Models: Accommodates custom TensorFlow Lite models for specific requirements.

- Flexible Processing Options: Processes data on-device for quick, offline tasks. (Cloud-based APIs, which can offer higher accuracy, are offered separately through Firebase ML.)

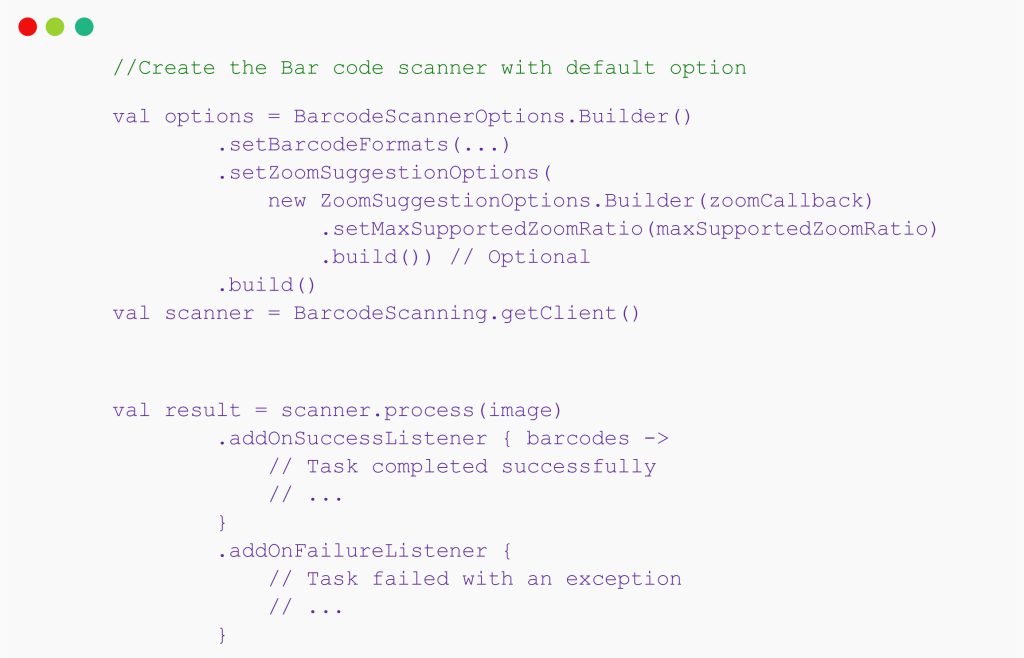

Google Code Scanner Features

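- Barcode Reading Without Camera Permission: Scans barcodes using on-device ML without your app having to request the camera permission, because Google Play services handles the camera.

- User-Friendly Interface: The scanning interface is provided and managed by Google Play services.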

Here’s how you can integrate it

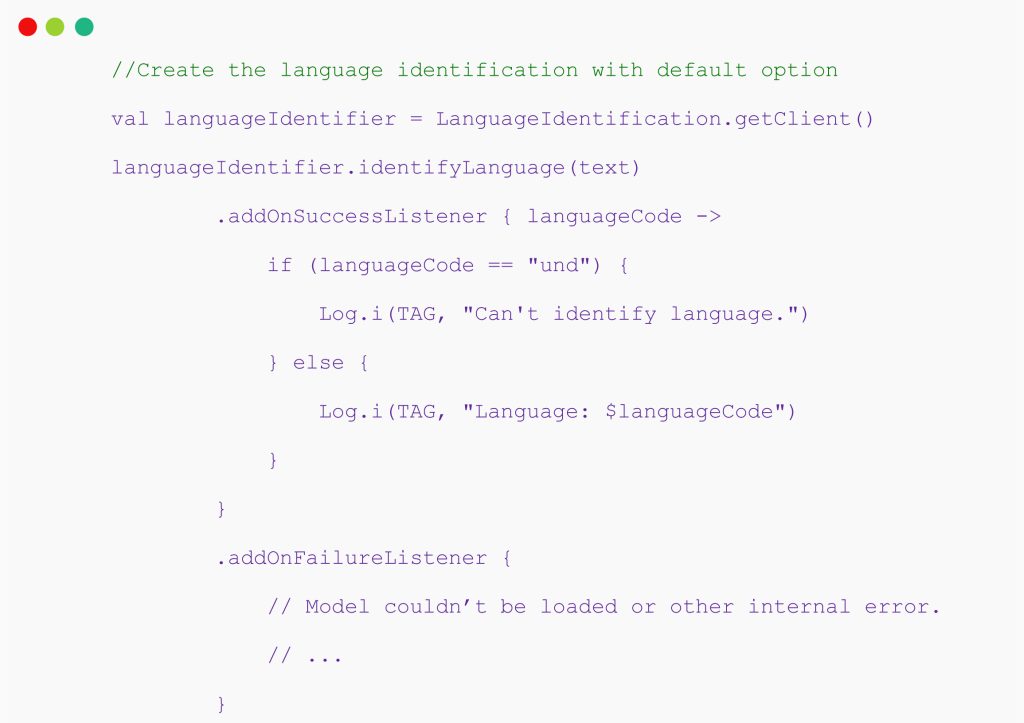

Language Identification Capabilities

- Extensive Language Support: Can identify over a hundred languages.

- Recognizes Romanized Text: Detects text in languages like Arabic, Bulgarian, Greek, Hindi, Japanese, Russian, and Chinese, in both original and Romanized scripts.

Here’s how you can integrate it

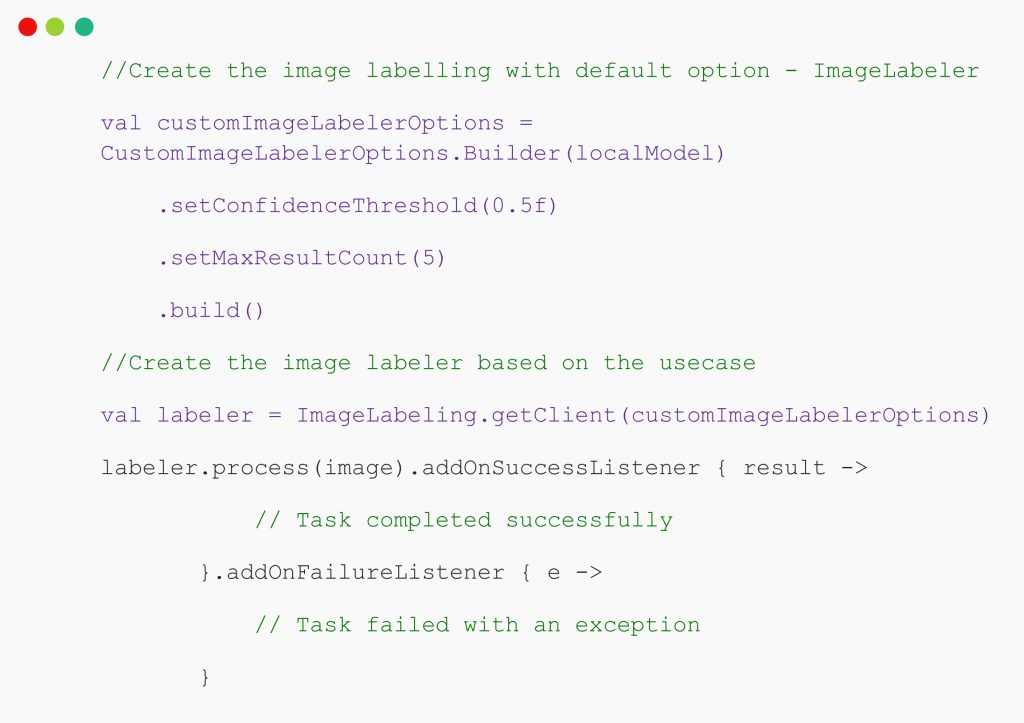
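ML Kit's language identification client (`LanguageIdentification.getClient()`) returns a language tag from `identifyLanguage(text)`, or `"und"` (undetermined) when no language reaches the confidence threshold, which is 0.5 by default; `identifyPossibleLanguages(text)` returns several candidates with confidences instead. If you work with the candidate list yourself, the selection rule can be sketched as follows. `LanguagePicker` and `Candidate` are hypothetical stand-ins, not ML Kit types.

```java
import java.util.List;

/**
 * Picks a language from scored candidates the way ML Kit's identifyLanguage() is
 * documented to behave: the most confident tag at or above a threshold, else "und".
 */
final class LanguagePicker {
    /** Tag ML Kit returns when no language is identified with enough confidence. */
    static final String UNDETERMINED = "und";

    /** Hypothetical stand-in for one (language tag, confidence) result. */
    static final class Candidate {
        final String languageTag;
        final float confidence;

        Candidate(String languageTag, float confidence) {
            this.languageTag = languageTag;
            this.confidence = confidence;
        }
    }

    private LanguagePicker() {}

    static String pick(List<Candidate> candidates, float threshold) {
        String best = UNDETERMINED;
        float bestConfidence = Float.NEGATIVE_INFINITY;
        for (Candidate c : candidates) {
            if (c.confidence >= threshold && c.confidence > bestConfidence) {
                best = c.languageTag;
                bestConfidence = c.confidence;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<Candidate> results = List.of(new Candidate("es", 0.81f), new Candidate("pt", 0.12f));
        // 0.5 mirrors ML Kit's documented default threshold.
        System.out.println(pick(results, 0.5f));
    }
}
```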

Image Labeling Functions

- General-Purpose Classifier: Recognizes over 400 object categories commonly found in photos.

- Customizable: Compatible with TensorFlow Hub pre-trained models or custom models developed with TensorFlow, AutoML Vision Edge, or TensorFlow Lite Model Maker.

- Simple APIs: High-level APIs handle model inputs/outputs and image processing, providing text descriptions of labels extracted from the TensorFlow Lite model.

Here’s how you can integrate it

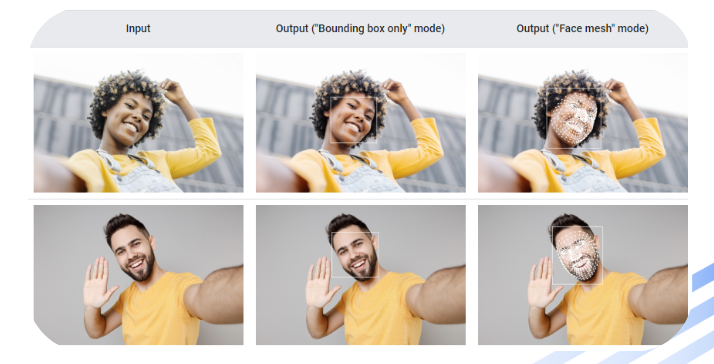

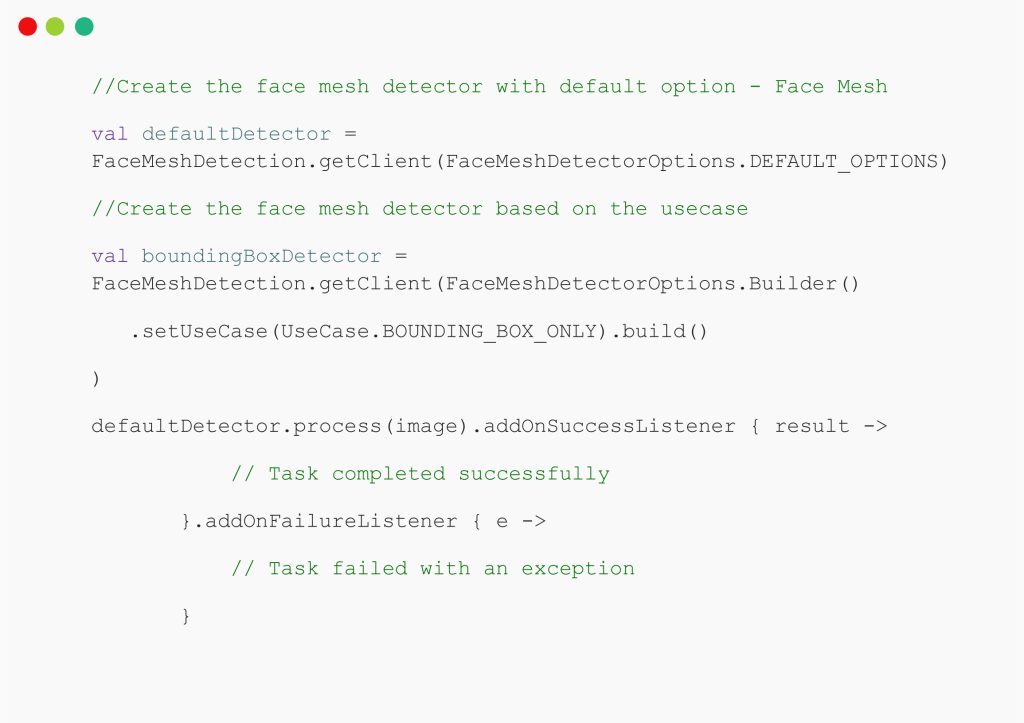
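ML Kit's image labeler (for example `ImageLabeling.getClient(ImageLabelerOptions.DEFAULT_OPTIONS)`) returns labels with a text description and a confidence score, and already drops labels below its confidence threshold, which `ImageLabelerOptions.Builder().setConfidenceThreshold(...)` can change. Apps often go one step further and display only the few most confident labels. The following sketch does that over a plain list; `LabelRanker` and its `Label` type are hypothetical, standing in for ML Kit's result objects.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Keeps the top-k labels above a confidence threshold, highest confidence first. */
final class LabelRanker {
    /** Hypothetical stand-in for one image label (text plus confidence in [0, 1]). */
    static final class Label {
        final String text;
        final float confidence;

        Label(String text, float confidence) {
            this.text = text;
            this.confidence = confidence;
        }
    }

    private LabelRanker() {}

    static List<String> topLabels(List<Label> labels, float minConfidence, int k) {
        List<Label> confident = new ArrayList<>();
        for (Label label : labels) {
            if (label.confidence >= minConfidence) {
                confident.add(label);
            }
        }
        confident.sort(Comparator.comparingDouble((Label l) -> l.confidence).reversed());
        List<String> result = new ArrayList<>();
        for (int i = 0; i < Math.min(k, confident.size()); i++) {
            result.add(confident.get(i).text);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Label> labels = List.of(
                new Label("Dog", 0.92f), new Label("Pet", 0.85f),
                new Label("Grass", 0.55f), new Label("Sky", 0.30f));
        System.out.println(topLabels(labels, 0.5f, 2));
    }
}
```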

Face Mesh Detection Features

- Face Recognition and Localization: Identifies faces in images, providing bounding box details.

- Detailed Face Mesh Data: Offers 468 3D points and triangle information for each detected face.

- Real-Time Processing: Efficient for real-time applications like video manipulation, processing video frames quickly on-device.

Here’s how you can integrate it

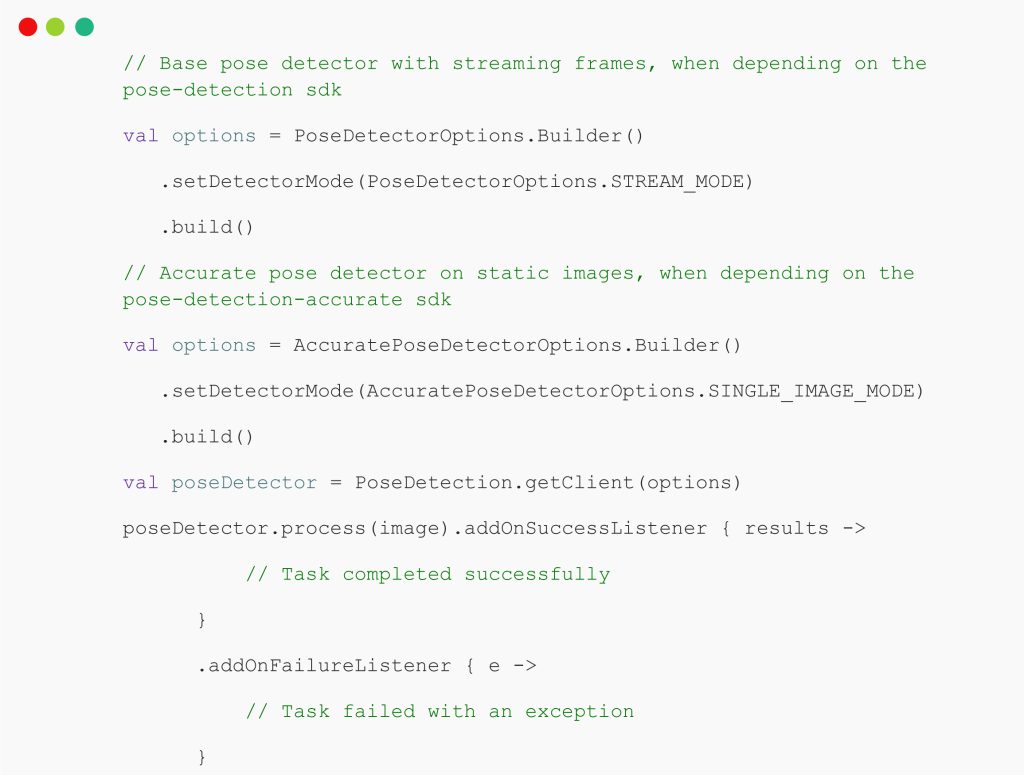
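Each detected face comes with its 468 mesh points as 3D positions (in ML Kit, via `FaceMesh.getAllPoints()`). As a small example of working with that data, the sketch below computes the extent of a set of points, for instance a subset around one facial feature, including the depth range from the z coordinates. `MeshBounds` and `Point3` are hypothetical stand-ins for ML Kit's point types.

```java
import java.util.List;

/** Computes the 3D extent of a set of face-mesh points (e.g. a subset around one feature). */
final class MeshBounds {
    /** Hypothetical stand-in for a mesh point's 3D position. */
    static final class Point3 {
        final float x, y, z;

        Point3(float x, float y, float z) {
            this.x = x;
            this.y = y;
            this.z = z;
        }
    }

    private MeshBounds() {}

    /** Returns {minX, minY, maxX, maxY, minZ, maxZ} over all points. */
    static float[] bounds(List<Point3> points) {
        if (points.isEmpty()) {
            throw new IllegalArgumentException("points must not be empty");
        }
        float minX = Float.POSITIVE_INFINITY, minY = Float.POSITIVE_INFINITY, minZ = Float.POSITIVE_INFINITY;
        float maxX = Float.NEGATIVE_INFINITY, maxY = Float.NEGATIVE_INFINITY, maxZ = Float.NEGATIVE_INFINITY;
        for (Point3 p : points) {
            minX = Math.min(minX, p.x);
            minY = Math.min(minY, p.y);
            minZ = Math.min(minZ, p.z);
            maxX = Math.max(maxX, p.x);
            maxY = Math.max(maxY, p.y);
            maxZ = Math.max(maxZ, p.z);
        }
        return new float[] {minX, minY, maxX, maxY, minZ, maxZ};
    }

    public static void main(String[] args) {
        float[] b = bounds(List.of(
                new Point3(120, 80, -5), new Point3(180, 95, 3), new Point3(150, 140, 0)));
        System.out.printf("x:[%.0f, %.0f] y:[%.0f, %.0f] depth:[%.0f, %.0f]%n",
                b[0], b[2], b[1], b[3], b[4], b[5]);
    }
}
```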

Pose Detection Overview

Pose detection in ML Kit is a feature with various capabilities:

- Cross-Platform Support: It supports both Android and iOS, providing a consistent experience across platforms.

- Full Body Tracking: The model identifies 33 key points on the skeleton, including hands and feet.

- InFrameLikelihood Score: Each landmark point is accompanied by a score (0.0 to 1.0) indicating the likelihood of it being in the image frame, where 1.0 signifies high confidence.

- Two SDK Options:

- Base SDK: Designed for modern smartphones (like Pixel 4 and iPhone X), Base SDK operates in real-time. The framerate is approximately 30 fps on Pixel 4 and 45 fps on iPhone X, with varying precision in landmark coordinates.

- Accurate SDK: This offers more precise landmark coordinates but at a slower framerate.

- Z Coordinate for Depth Analysis: Z coordinate provides depth information, helping to discern if body parts are in front of or behind the user’s hips.

Here’s how you can integrate it

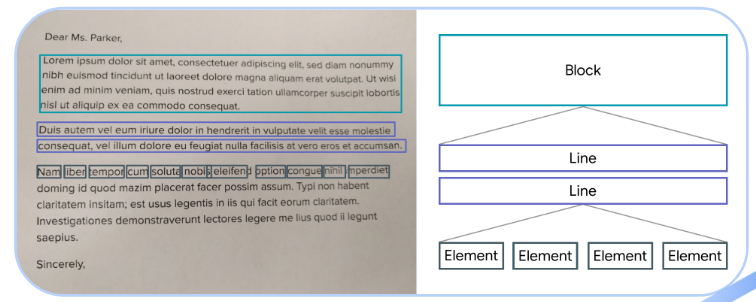

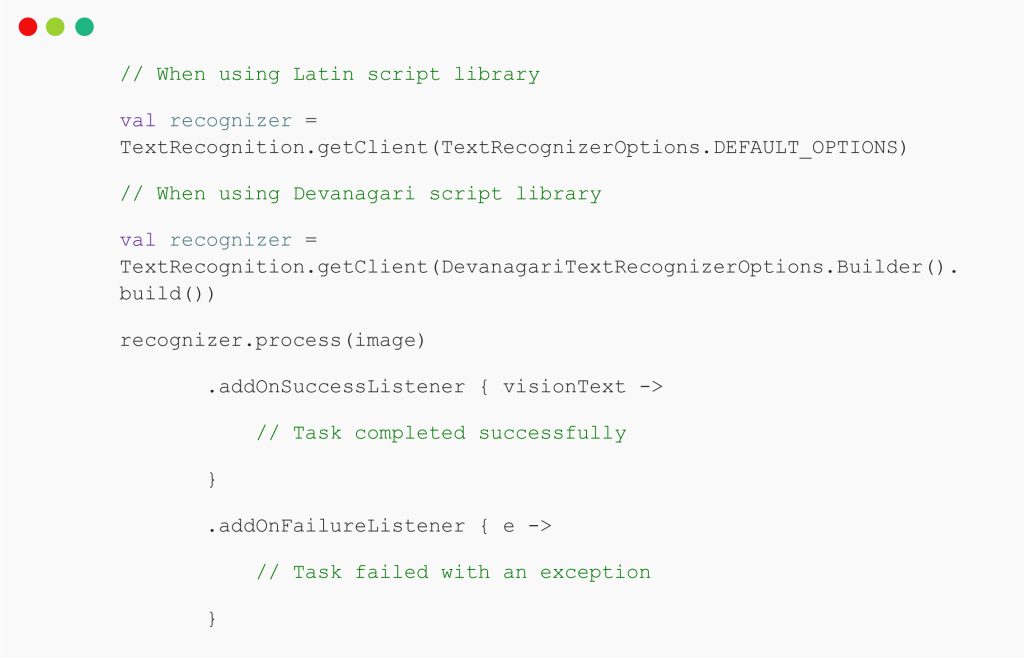
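Each ML Kit pose landmark exposes a position and an in-frame likelihood (`getInFrameLikelihood()`). A common next step in fitness or posture apps is to compute joint angles while ignoring landmarks that are probably outside the frame. The sketch below assumes a hypothetical `Landmark` type and a 0.5 likelihood cut-off chosen for illustration; it measures the angle at a middle joint (for example the elbow, between shoulder and wrist) with `atan2`.

```java
/** Joint-angle helper for pose landmarks, skipping joints that are likely off-screen. */
final class PoseAngles {
    /** Hypothetical stand-in for a pose landmark: 2D position plus in-frame likelihood. */
    static final class Landmark {
        final float x, y;
        final float inFrameLikelihood; // 0.0 to 1.0

        Landmark(float x, float y, float inFrameLikelihood) {
            this.x = x;
            this.y = y;
            this.inFrameLikelihood = inFrameLikelihood;
        }
    }

    private PoseAngles() {}

    /** Angle at `mid` formed by first-mid-last, in degrees within [0, 180]. */
    static double angleDegrees(Landmark first, Landmark mid, Landmark last) {
        double a1 = Math.atan2(first.y - mid.y, first.x - mid.x);
        double a2 = Math.atan2(last.y - mid.y, last.x - mid.x);
        double degrees = Math.abs(Math.toDegrees(a2 - a1));
        return degrees > 180.0 ? 360.0 - degrees : degrees; // take the smaller angle
    }

    /** Returns the angle, or null if any landmark is below the likelihood threshold. */
    static Double angleIfVisible(Landmark first, Landmark mid, Landmark last, float minLikelihood) {
        if (first.inFrameLikelihood < minLikelihood
                || mid.inFrameLikelihood < minLikelihood
                || last.inFrameLikelihood < minLikelihood) {
            return null;
        }
        return angleDegrees(first, mid, last);
    }

    public static void main(String[] args) {
        Landmark shoulder = new Landmark(100, 100, 0.99f);
        Landmark elbow = new Landmark(100, 200, 0.97f);
        Landmark wrist = new Landmark(200, 200, 0.95f);
        System.out.println("Elbow angle: " + angleIfVisible(shoulder, elbow, wrist, 0.5f));
    }
}
```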

Text Recognition

Text recognition in ML Kit offers the following capabilities:

- Multilingual Support: It recognizes text in various scripts and languages, including Chinese, Devanagari, Japanese, Korean, and Latin.

- Structural Analysis: It breaks recognized text into blocks, lines, elements (roughly words), and symbols (individual characters).

- Language Identification: It determines the language of the recognized text.

- Real-Time Recognition: ML Kit can recognize text in real time on a broad spectrum of devices.

Here’s how you can integrate it
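In ML Kit, recognition starts from a client such as `TextRecognition.getClient(TextRecognizerOptions.DEFAULT_OPTIONS)` and a call to `process(image)`; the returned `Text` object is organized as blocks, lines, and elements. The sketch below shows one way to walk that hierarchy and rebuild readable text. To keep it self-contained, `Block` and `Line` are hypothetical stand-ins that hold plain strings rather than ML Kit's result classes.

```java
import java.util.ArrayList;
import java.util.List;

/** Rebuilds readable text from a block -> line -> element hierarchy. */
final class RecognizedTextFormatter {
    /** Hypothetical stand-in for a recognized line: its elements (roughly words). */
    static final class Line {
        final List<String> elements;

        Line(List<String> elements) {
            this.elements = elements;
        }
    }

    /** Hypothetical stand-in for a recognized block: its lines. */
    static final class Block {
        final List<Line> lines;

        Block(List<Line> lines) {
            this.lines = lines;
        }
    }

    private RecognizedTextFormatter() {}

    /** Joins elements with spaces, lines with newlines, and blocks with blank lines. */
    static String format(List<Block> blocks) {
        List<String> blockTexts = new ArrayList<>();
        for (Block block : blocks) {
            List<String> lineTexts = new ArrayList<>();
            for (Line line : block.lines) {
                lineTexts.add(String.join(" ", line.elements));
            }
            blockTexts.add(String.join("\n", lineTexts));
        }
        return String.join("\n\n", blockTexts);
    }

    /** Counts elements across all blocks. */
    static int elementCount(List<Block> blocks) {
        int count = 0;
        for (Block block : blocks) {
            for (Line line : block.lines) {
                count += line.elements.size();
            }
        }
        return count;
    }

    public static void main(String[] args) {
        List<Block> blocks = List.of(
                new Block(List.of(new Line(List.of("Hello", "world")), new Line(List.of("from", "ML", "Kit")))),
                new Block(List.of(new Line(List.of("Bye")))));
        System.out.println(format(blocks));
        System.out.println(elementCount(blocks) + " elements");
    }
}
```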

MediaPipe Overview

MediaPipe is a framework designed for constructing cross-platform machine learning pipelines, notable for its:

- Ease of Use: MediaPipe simplifies the process of building ML applications.

- Innovation: It incorporates the latest advancements in applied machine learning.

- Speed: This also ensures fast development and execution of ML pipelines.

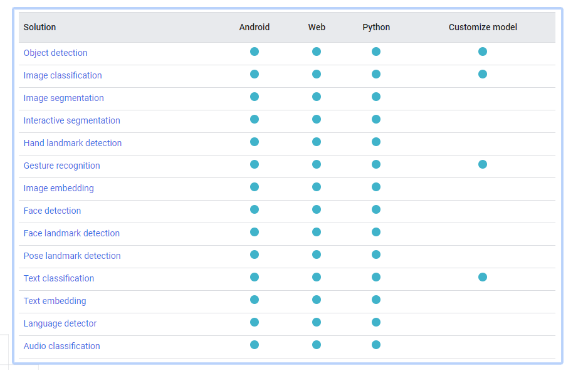

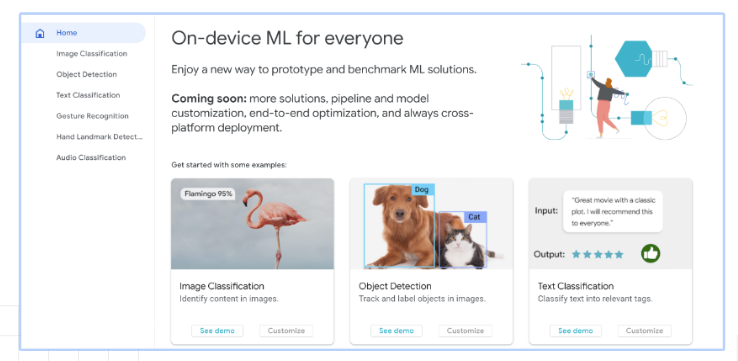

MediaPipe Solutions

Providing ready-to-use solutions, MediaPipe enables developers to quickly assemble on-device ML applications.

Available Solutions

Effortless On-Device ML in Minutes

Here’s how you can rapidly integrate on-device machine learning into an app:

- Identify the ML Requirement: Pinpoint the specific machine learning feature your app needs. For example, a photo editing app may require object detection or image segmentation.

- Select the Appropriate Tool: Choose a tool or library that fits your requirements. For gesture recognition, MediaPipe is a great option, while ML Kit is well suited to text recognition.

- Integrate Pre-Built Solutions: Next, use the ready-to-use solutions or APIs from your chosen tool. This typically involves adding a dependency and some code to your app, and ensuring the app has the necessary permissions.

- Test and Refine: After integration, test the machine learning feature thoroughly to make sure it performs well in a variety of scenarios, and use user feedback to guide further improvements.

- Optimize Performance: Quick integration is convenient, but optimizing the feature is often crucial. This may include reducing model size, improving speed and accuracy, or lowering latency.
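Reducing model size often means quantization: TensorFlow Lite's integer quantization stores values as 8-bit integers together with a scale and a zero point, so that real_value = scale × (q − zero_point), which takes a quarter of the storage of float32. The converter does this for you; the Java sketch below only illustrates the arithmetic, using a simplified per-tensor asymmetric scheme (TensorFlow Lite itself, for example, quantizes weights symmetrically with a zero point of 0). `Int8Quantizer` is a hypothetical name.

```java
/**
 * Simplified asymmetric int8 quantization of a float range, following the affine
 * mapping real = scale * (q - zeroPoint). Illustrative only; real converters pick
 * ranges and per-channel parameters themselves.
 */
final class Int8Quantizer {
    final float scale;
    final int zeroPoint;

    /** Builds a mapping covering [min, max] (widened to include 0) onto [-128, 127]. */
    Int8Quantizer(float min, float max) {
        float lo = Math.min(min, 0f);
        float hi = Math.max(max, 0f);
        if (hi == lo) {
            scale = 1f; // degenerate all-zero range: any scale works
            zeroPoint = 0;
        } else {
            scale = (hi - lo) / 255f;
            zeroPoint = Math.round(-128f - lo / scale);
        }
    }

    byte quantize(float x) {
        int q = Math.round(x / scale) + zeroPoint;
        return (byte) Math.max(-128, Math.min(127, q)); // clamp to the int8 range
    }

    float dequantize(byte q) {
        return (q - zeroPoint) * scale;
    }

    public static void main(String[] args) {
        Int8Quantizer quantizer = new Int8Quantizer(-1f, 1f);
        float weight = 0.3f;
        byte stored = quantizer.quantize(weight); // 1 byte instead of 4 for float32
        System.out.println(weight + " -> " + stored + " -> " + quantizer.dequantize(stored));
    }
}
```

Because zero is always inside the range, it is represented exactly, and values inside the range come back within half a quantization step (scale / 2) of the original.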

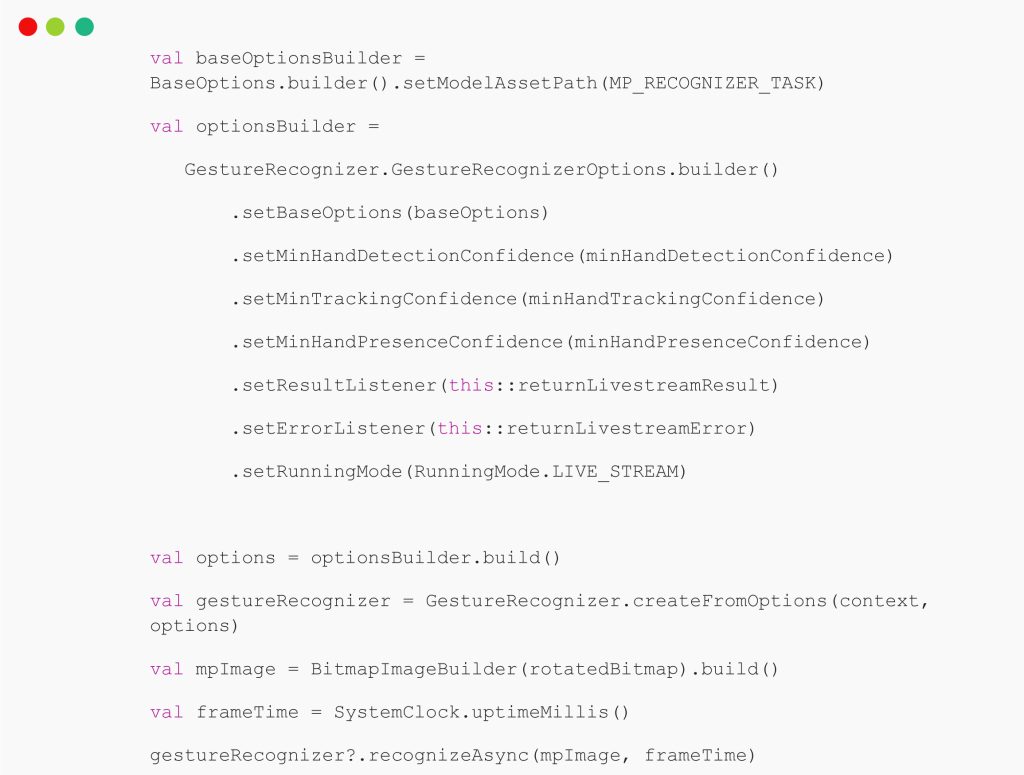

Gesture Recognition Overview

Gesture recognition in MediaPipe is designed to be efficient and user-friendly, featuring:

- Real-Time Detection: It identifies hand gestures as they occur.

- Hand Landmarks: This provides detailed landmarks of detected hands.

- Speed: It offers fast processing and response times.

Here’s how you can integrate it
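MediaPipe's `GestureRecognizer` task reports, for each detected hand, ranked gesture categories together with 21 hand landmarks in normalized image coordinates. Landmarks make it easy to add simple custom gestures of your own. The sketch below detects a "pinch" from the distance between the thumb tip (landmark 4) and the index fingertip (landmark 8); `PinchDetector` and its distance threshold are hypothetical and would need tuning for a real app.

```java
/**
 * Detects a "pinch" from the 21 hand landmarks MediaPipe's hand model produces,
 * using the distance between the thumb tip and the index fingertip.
 */
final class PinchDetector {
    static final int NUM_HAND_LANDMARKS = 21;
    static final int THUMB_TIP = 4;
    static final int INDEX_FINGER_TIP = 8;

    private PinchDetector() {}

    /**
     * landmarks[i] = {x, y} in normalized [0, 1] image coordinates.
     * maxDistance is a hypothetical, app-tuned threshold in the same units.
     */
    static boolean isPinching(float[][] landmarks, float maxDistance) {
        if (landmarks.length != NUM_HAND_LANDMARKS) {
            throw new IllegalArgumentException("Expected " + NUM_HAND_LANDMARKS + " landmarks");
        }
        float dx = landmarks[THUMB_TIP][0] - landmarks[INDEX_FINGER_TIP][0];
        float dy = landmarks[THUMB_TIP][1] - landmarks[INDEX_FINGER_TIP][1];
        return Math.hypot(dx, dy) <= maxDistance;
    }

    public static void main(String[] args) {
        float[][] hand = new float[NUM_HAND_LANDMARKS][2];
        hand[THUMB_TIP] = new float[] {0.50f, 0.50f};
        hand[INDEX_FINGER_TIP] = new float[] {0.52f, 0.51f};
        System.out.println("Pinching: " + isPinching(hand, 0.05f));
    }
}
```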

MediaPipe Studio

- Customization: MediaPipe Studio lets developers try out and evaluate MediaPipe solutions and tailor them with their own models and input and output settings.

- MediaPipe Model Maker: A companion tool for further model customization, such as retraining the models used by MediaPipe solutions on your own data.

MediaPipe Studio — Custom Models

- Model Input and Output: Custom models must meet specific input and output requirements to work with a given MediaPipe solution.

- MediaPipe Model Maker Tool: This tool offers additional customization capabilities.

TensorFlow Lite (TFLite) for Android

- Framework: It is a lightweight, open-source deep learning framework by Google, designed for mobile and embedded devices.

- Inference Engine: It provides an inference engine optimized for on-device execution, including on Android, ensuring fast model execution.

- Automatic Updates and Reduced Size: When used through Google Play services, the TFLite runtime is not bundled into your app, which keeps the app's binary size small, and it is updated automatically.

Why TensorFlow Lite?

- Lightweight: It is optimized for size, suitable for devices with limited storage and processing power.

- Versatile: This supports various ML tasks, from image classification to language processing.

- Cross-Platform: It is capable of working on both Android and iOS.

- Offline Functionality: Because inference runs on the device, apps keep operating seamlessly without an internet connection.

Key Features of TensorFlow Lite

- Model Conversion: It can convert TensorFlow models to a mobile-optimized format.

- Pre-Trained Models: This provides ready-to-use models for common ML tasks.

- Customization: TensorFlow Lite supports custom models for specific app needs.

- Neural Networks API Integration: It can use Android's Neural Networks API (NNAPI) to accelerate inference on supported hardware.

Integrating TFLite into Android Apps

Following are the steps to integrate TensorFlow Lite into Android applications:

- Model Preparation: Select a pre-trained model or train a custom TensorFlow model, then convert it to TFLite format.

- Integration: In the next step, incorporate the TFLite Android library into your app project.

- Model Deployment: Then place the TFLite model in the app’s assets folder for packaging.

- Inference: You can use the TFLite interpreter to run the model on input data.
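With the TensorFlow Lite Java API, the inference step is typically a call to `run(input, output)` on an `org.tensorflow.lite.Interpreter`. The code you write around it converts pixels into the tensor layout the model expects and interprets the output. The sketch below assumes a hypothetical float32 image classifier that takes interleaved RGB values scaled to [0, 1] (check your model's metadata for its actual input size and normalization); in an app you would copy this array into the interpreter's input, for example a direct `ByteBuffer` or a `float[1][height][width][3]` array.

```java
/**
 * Input/output helpers around a TensorFlow Lite image classifier, assuming a
 * hypothetical float32 model with interleaved RGB input scaled to [0, 1].
 */
final class TfliteIo {
    private TfliteIo() {}

    /** Converts ARGB_8888 pixels (as returned by Bitmap.getPixels) to RGB floats in [0, 1]. */
    static float[] toNormalizedRgb(int[] argbPixels) {
        float[] out = new float[argbPixels.length * 3];
        for (int i = 0; i < argbPixels.length; i++) {
            int pixel = argbPixels[i];
            out[3 * i] = ((pixel >> 16) & 0xFF) / 255f;    // red
            out[3 * i + 1] = ((pixel >> 8) & 0xFF) / 255f; // green
            out[3 * i + 2] = (pixel & 0xFF) / 255f;        // blue (alpha is dropped)
        }
        return out;
    }

    /** Index of the highest score, i.e. the predicted class. */
    static int argmax(float[] scores) {
        if (scores.length == 0) {
            throw new IllegalArgumentException("scores must not be empty");
        }
        int best = 0;
        for (int i = 1; i < scores.length; i++) {
            if (scores[i] > scores[best]) {
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        float[] input = toNormalizedRgb(new int[] {0xFFFF0000, 0xFF00FF00});
        System.out.println(java.util.Arrays.toString(input));
        System.out.println("Predicted class: " + argmax(new float[] {0.1f, 0.7f, 0.2f}));
    }
}
```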

Utilizing TensorFlow Lite in Google Play Services

Recent Adoption and Usage

Since September 2022, TensorFlow Lite within Google Play Services has seen significant adoption. It has since crossed the mark of 1 billion monthly users and is being integrated into more than 10,000 apps.

ML Kit APIs Powered by TensorFlow Lite

- TensorFlow Lite in Google Play Services drives several ML Kit APIs, such as Barcode Scanning, Language Identification, and Smart Reply.

- These APIs let developers bring machine learning to native Android apps, enhancing user experience and app functionality.

Integrating ML Kit APIs in Android Applications

Following are steps to integrate ML Kit APIs in your Android application:

- Project Setup: Add the dependencies for the ML Kit APIs you need to your Android app. (The current standalone ML Kit SDK does not require a Firebase project; earlier versions of ML Kit were part of Firebase.)

- API Selection: Choose an ML Kit API that suits your app’s needs.

- API Implementation: Follow the provided guidelines to integrate the chosen API. For example, in text recognition, process image data through the Text Recognition API and manage the output.

- Optimization and Testing: Ensure the ML feature functions well under different conditions, focusing on performance and accuracy.
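For the optimization and testing step, a quick way to check performance is to time the ML call repeatedly on a real device and look at percentiles rather than a single run. The sketch below is a hypothetical helper (`LatencyProbe`) that discards a few warm-up runs, times the rest with `System.nanoTime()`, and reports nearest-rank percentiles; for rigorous measurements, a dedicated benchmarking tool is preferable.

```java
import java.util.Arrays;

/** Rough latency measurement for an ML call: warm-up, repeated timing, percentiles. */
final class LatencyProbe {
    private LatencyProbe() {}

    /** Runs task `warmup` times untimed, then `runs` timed times; returns latencies in ms. */
    static double[] measureMillis(Runnable task, int warmup, int runs) {
        for (int i = 0; i < warmup; i++) {
            task.run();
        }
        double[] millis = new double[runs];
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            task.run();
            millis[i] = (System.nanoTime() - start) / 1_000_000.0;
        }
        return millis;
    }

    /** Nearest-rank percentile for p in (0, 100]. */
    static double percentile(double[] values, double p) {
        if (values.length == 0 || p <= 0 || p > 100) {
            throw new IllegalArgumentException("need values and 0 < p <= 100");
        }
        double[] sorted = values.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p * sorted.length / 100.0);
        return sorted[rank - 1];
    }

    public static void main(String[] args) {
        // Stand-in workload; in an app this would be one call into your ML feature.
        Runnable task = () -> {
            double acc = 0;
            for (int i = 0; i < 100_000; i++) {
                acc += Math.sqrt(i);
            }
            if (acc < 0) {
                System.out.println(acc); // discourages the JIT from removing the loop
            }
        };
        double[] ms = measureMillis(task, 5, 50);
        System.out.printf("p50=%.3f ms, p90=%.3f ms%n", percentile(ms, 50), percentile(ms, 90));
    }
}
```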

Conclusion

From the above, it is evident that on-device machine learning is a transformative trend in mobile app development. Tools such as ML Kit, MediaPipe, and TensorFlow Lite equip Android developers to build sophisticated, efficient, and user-centric applications. Machine learning in native Android apps is a path-breaking technology that is likely to accelerate mobile app development in the years to come, and we can expect more innovations and more streamlined integration processes in the future.

FAQs

Q: How to implement AI in an Android app?

Integrate AI into your Android app using frameworks such as TensorFlow Lite or ML Kit for tasks like image recognition and natural language processing.

Q: How do you integrate the ML model into a React Native app?

Use community packages such as react-native-tensorflow or react-native-ml-kit to integrate machine learning models into your React Native application.

Q: Can I run machine learning on my phone?

Yes, with advancements in on-device processing, running machine learning models directly on your phone is feasible, providing faster and more privacy-conscious AI experiences.

Q: What is Android machine learning?

Android machine learning refers to the integration of machine learning capabilities within the Android operating system, enabling developers to build AI-powered features directly into Android applications.