Given the rapid rise of AI over the last decade, it should not come as a surprise to anyone that humans are actively trying to integrate languages into machines and software through the field of artificial intelligence. They are doing this through a process called Natural Language Processing (NLP) in Artificial Intelligence services.

Not many are aware but NLP methods and NLP techniques date back to the Second World War when Alan Turing came up with the Turing Test. It tests the intelligence of a machine. It serves as the basis for modern Natural Language Processing techniques. We find the applications of Natural Language Processing Techniques in Gamil, when a computer autocompletes your sentence or mail, or when LinkedIn suggests responses to text messages, and the list goes on.

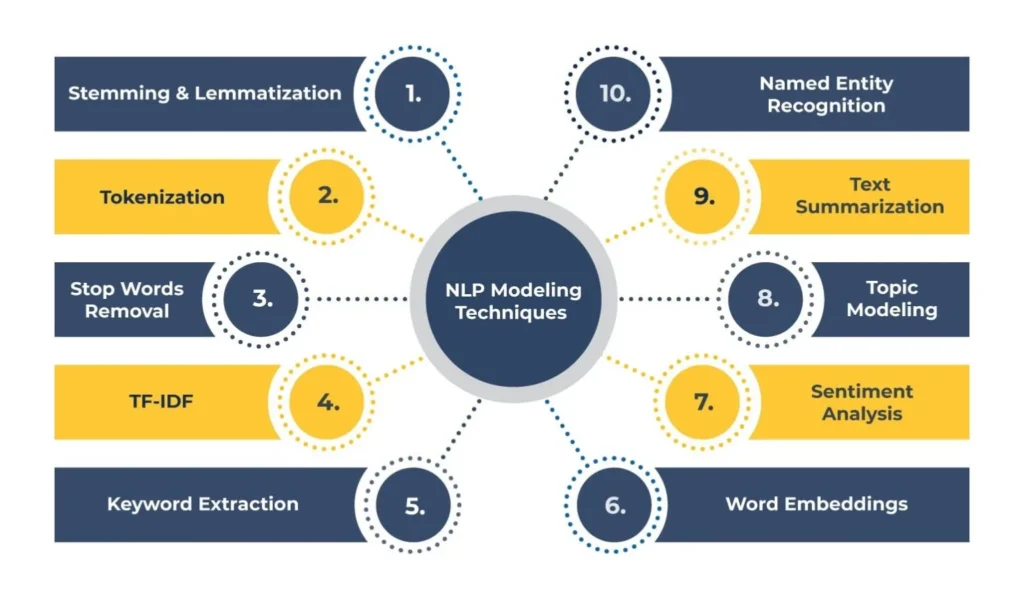

This article explores NLP technology, a branch of artificial intelligence (AI) that allows machines to comprehend human language. It also explains the top 10 NLP techniques every data scientist should know. NLP data science is the real force behind NLP techniques and NLP methods. But, before we dig into Natural Language Processing Techniques, we will first address the basic definition and concept behind NLP techniques and NLP methods. Let’s begin with the top 10 NLP techniques.

What is NLP?

Natural language processing, hereafter referred to as NLP, is the AI-powered process of rendering human language input comprehensible and decipherable to software and machines. Do not confuse this NLP with neuro-linguistic programming, which is a field of psychology. Both are quite different. The topic of discussion here is NLP for data scientists. However many are known to mix Natural Language Processing Techniques with the branch of psychology.

NLP essentially consists of natural language understanding (human to machine), also known as natural language interpretation, and natural language generation (machine to human).

Natural Language Understanding (NLU) – It refers to the techniques that aim to deal with the syntactical structure of a language and derive semantic meaning from it. Examples include Named Entity Recognition, Speech Recognition, and Text Classification. Natural Language Processing techniques find various applications in our lives. You can find many Natural Language Processing techniques in personal and professional realms which we will discuss as we move forward.

Natural Language Generation (NLG) – It takes the results of NLU a step ahead with language generation. Examples of Natural Language Processing techniques include Text Generation, Question Answering, and Speech Generation.

Developers of language modeling in NLP applications like search autosuggest, language translation, and autofill employ all these basic Natural Language Processing techniques to develop. These Natural Language Processing techniques are essential for you if you want to become a top data scientist.

But before we discuss these Natural Language Processing techniques, let’s understand the two main types of NLP algorithms that data scientists typically use.

Types of NLP Algorithms

Rule-Based System

A rule-based NLP system relies on predefined linguistic rules and patterns to process natural language text. These rules are manually crafted by experts and specify how the system should analyze and interpret language. While rule-based systems can be accurate for well-defined tasks, they often struggle with handling ambiguity and require frequent updates to accommodate new language variations.

Machine Learning-Based System

A machine learning-based NLP system leverages algorithms and statistical models to learn patterns and relationships in language from large datasets. It doesn’t rely on explicit rules but rather identifies patterns through training. Such systems excel in tasks like sentiment analysis, language translation, and speech recognition, as they can adapt to varying language usage and improve over time with more data.

How Does NLP Work?

NLP works by employing a combination of techniques from computational linguistics, machine learning, and deep learning to process and analyze natural language data.

At its core, NLP involves several key steps, including tokenization (breaking text into smaller units), part-of-speech tagging (identifying grammatical categories of words), syntactic parsing (analyzing sentence structure), semantic analysis (extracting meaning from text), and finally, applying various algorithms and models to perform specific tasks like sentiment analysis, machine translation, or text generation.

NLP uses this process to enable computers to interact with and understand human language, thereby facilitating a wide range of applications in areas such as chatbots, virtual assistants, sentiment analysis, and information retrieval.

NLP & Big Data – A Unique Relationship

The relationship between natural language processing (NLP) and big data is symbiotic, with each field leveraging the strengths of the other to extract valuable insights and drive innovation.

NLP techniques are essential for extracting, analyzing, and understanding the vast amounts of unstructured textual data generated in the era of big data. Businesses everywhere use NLP algorithms to uncover patterns, trends, and sentiments hidden within text data. This is what drives informed decision-making and predictive analytics.

But this is a two-way street. Big data infrastructure and technologies provide the scalability and computational power necessary to process and analyze large volumes of text data efficiently, which is what empowers NLP applications to scale and perform effectively in real-world scenarios.

Without one, the other has minimal utility. Together, natural language processing and big data applications are disrupting more traditional ways of working, from automotive to marketing to business intelligence and analytics.

Let’s look at the leading natural language processing NLP techniques now.

Top 14 NLP Modeling Techniques

Tokenization

Tokenization is one of the most essential and basic Natural Language Processing techniques. It is a vital step for processing text for an NLP application whereby you take a long-running text string and break it down into smaller units. Each unit is called a token, representing a word, symbol, number, etc. While there are many natural language processing methods, tokenization is one of the important ones.

These tokens in NLP techniques understand the context when developing an NLP model. As such, they are the building blocks of a model. Many tokenizers use a blank space as a separator to create tokens. Here are some of the tokenization techniques employed in natural language processing NLP, depending on your goal:

- White Space Tokenization – This technique divides text into tokens based on whitespace characters, such as spaces, tabs, or line breaks. Each consecutive sequence of characters between whitespace boundaries becomes a token.

- Rule-based Tokenization – Rule-based tokenization relies on predefined rules or patterns to segment text into tokens. These rules might include punctuation marks, hyphens, or specific character sequences.

- Spacy Tokenizer – Although not a technique but a popular NLP library, Spacy provides a tokenization tool as part of its preprocessing pipeline. Spacy’s tokenizer applies advanced linguistic rules to split text into tokens effectively.

- Dictionary-based Tokenization – It involves using a predefined dictionary or lexicon to identify tokens in text. Words or phrases present in the dictionary are recognized as tokens, while others may be treated as out-of-vocabulary (OOV).

- Subword Tokenization – It breaks down words into smaller subword units, such as character n-grams or morphemes. This approach is useful for handling out-of-vocabulary words and improving generalization in machine translation or text generation tasks.

- Penn Tree Tokenization – This is a specific tokenization scheme commonly used in the Penn Treebank dataset. It follows guidelines for tokenizing punctuation, contractions, and other linguistic constructs according to a predefined standard.

Stemming and Lemmatization

Stemming or lemmatization is the next most important NLP technique in the preprocessing phase. It refers to reducing a word to its word stem that attaches to a prefix or suffix. Lemmatization in NLP techniques refers to the text normalization technique whereby any kind of word is switched to its base root mode.

Search engines and chatbots use these two Natural Language Processing techniques to understand the meaning of a word. Both techniques aim to generate the root word of any word. While stemming focuses on removing the prefix or suffix of a word, lemmatization is more sophisticated in that it generates the root word through morphological analysis.

Machine Translation

Machine translation is the process of automatically translating text or speech from one language to another. It employs various techniques such as statistical machine translation, rule-based translation, and neural machine translation to generate accurate translations.

These systems analyze input text, break it down into smaller units, and then generate equivalent expressions in the target language. Data scientists employ machine translation for language localization, cross-lingual information retrieval, and global communication.

Stop Words Removal

Stop word removal is the next step in the preprocessing phase after stemming and lemmatization. Many words in a language serve as fillers; they don’t have a meaning of their own—for example, conjunctions like since, and, because, etc. Prepositions like in, at, on, above, etc., are also fillers.

There are many words where Natural Language Processing techniques do not have much of an impact. Such words don’t serve any significant purpose in an NLP model. However, it is not mandatory to stop word removal for every model. The decision depends on the kind of task. For example, when implementing text classification, stop-word removal is a helpful technique. But machine translation and text summarization do not require to stop word removal.

You can use various libraries like SpaCy, NLTK, and Gensim for word removal.

TF-IDF

TF-IDF is a statistical method used to show the importance of a given word for a document in a compendium of documents. To calculate the TF-IDF statistical measure, you multiply two distinct values (term frequency and inverse document frequency).

- Term Frequency (TF) – It is used to calculate the frequency of a word’s occurrence in a document. Use the following formula to calculate it:

TF (t, d) = count of t in d/ number of words in d

Words like “is,” “the,” and “will” usually have the highest frequency term frequency.

- Inverse Document Frequency (IDF) – Before explaining IDF, let’s understand Document Frequency first. Document Frequency calculates the presence of a word in a collection of documents.

IDF is the opposite of Document Frequency. It calculates the importance of a term in a corpus of documents. Words that are specific to a document will have high IDF.

The idea behind TF-IDF is to find prime words in a document by looking for words having a high frequency in one document but not the entire corpus documents. These words are usually specific to a discipline. For example, a document related to geography will have terms like topography, latitude, longitude, etc. But the same will not be true for a computer science document, which will likely have terms like data, processor, software, etc.

Dependency Parsing

This natural language processing technique analyzes the grammatical structure of a sentence to determine the relationships between words. It aims to identify the syntactic dependencies between words, such as subject-verb and object-verb relationships, to create a parse tree representing the hierarchical structure of the sentence.

Dependency parsing is crucial for tasks like information extraction, question answering, and syntactic analysis in various NLP applications.

Keyword Extraction

There are many popular and known Natural Language Processing techniques but keyword extraction stands out. People who read extensively intuitively develop a skimming-through skill. They skim through a text – be it a newspaper, a magazine, or a book – by skipping out the insignificant words while holding on to the ones that matter the most. Thus, they can extract the meaning of a text without much ado.

Keyword extraction as an NLP modeling technique does the same thing by finding the important words in a document. Therefore, keyword extraction is a text analysis technique that derives purposeful insights for any given topic. Thus, you don’t have to spend a lot of time reading through a document. You can simply use the keyword extraction technique to extract relevant keywords.

This technique is handy for NLP applications that wish to unearth customer feedback or identify the important points in any news item. There are two ways to do this:

- One is via TF-IDF, as discussed earlier. You can easily extract the top keyword using the highest TF-IDF.

- The second way to do keyword extraction is to use Gensim, an open-source Python library used for document indexing, topic modeling, etc. You can also use SpaCy and YAKE for keyword extraction.

Word Embeddings

While we are discussing Natural Language Processing techniques, we cannot omit word embeddings. An important question that confronts NLP data scientists is how to convert a body of text into numerical values that can be fed to machine learning and deep learning algorithms. Data scientists turn to word embeddings, also known as word vectors, to solve this issue.

Word embeddings refers to an approach whereby text and documents are represented using numeric vectors. It represents individual words as real-valued vectors in a lower-dimensional space. Similar words have similar representations.

In other words, it is a method that extracts the features of a text to enable us to input them into machine learning models. Hence, word embeddings are necessary for training a machine learning model.

n NLP methods, you can use predefined word embeddings or learn them from scratch for a dataset. Various word embeddings are available today, including GloVe, TF-IDF, Word2Vec, BERT, ELMO, CountVectorizer, etc.

Chunking

Chunking is an NLP text processing technique that involves identifying and grouping together adjacent words or tokens in a sentence that form meaningful units, such as noun phrases or verb phrases.

It relies on part-of-speech tagging and regular expression patterns to recognize and extract these chunks. Chunking is useful for tasks like named entity recognition, text summarization, and information extraction, where identifying meaningful phrases or segments of text is essential for further analysis or processing.

Sentiment Analysis

Sentiment analysis is an NLP technique used to contextualize a text to ascertain whether it is positive, negative, or neutral. It is also known as opinion mining and emotion AI. Businesses employ this NLP technique or NLP method to classify text and determine customer sentiment around their product or service. Most Natural Language Processing techniques are useful on social media.

It is also widely used by social media networks like Facebook and Twitter to curb hate speech and other objectionable content. It is safe to assume how beneficial these Natural Language Processing techniques can be.

Topic Modeling

A topic model in natural language processing refers to a statistical model used to pull abstract topics or hidden themes from a collection of multiple documents. It is an unsupervised machine-learning algorithm, which means it does not need training. Moreover, it makes it an easy and quick way to analyze data.

Companies use data modeling to identify one of the Natural Language Processing techniques of topics in customer reviews by finding recurring words and patterns. So, instead of spending hours sifting through tons of customer feedback data, you can use topic modeling to decipher the most essential topics quickly. This enables businesses to provide better customer service and improve their brand reputation.

Speech Recognition

Speech recognition is also known as Automatic Speech Recognition (ASR). It is the process of converting spoken language into text. As such, it involves analyzing audio recordings of speech and transcribing them into written text.

Speech recognition systems use various techniques, such as acoustic modeling, language modeling, and statistical methods to accurately recognize and transcribe spoken words. These systems have applications in voice-controlled assistants, dictation software, voice search, and hands-free computing, making them integral to modern communication and interaction technologies.

Text Summarization

The text summarization technique of NLP is used to summarize a text and make it more concise while maintaining its coherence and fluency. It enables you to extract important information from a document without having to read every word of it. In other words, this automatic summarization saves you a lot of time. This is one of the most fascinating Natural Language Processing techniques.

There are two text summarization techniques.

- Extraction-based summarization – This Natural Language Processing technique does not entail making any changes to the original text. Instead, it just extracts some keywords and phrases from the document.

- Abstraction-based summarization – This summarization technique creates new phrases and sentences from the original document that depict the most important information. It paraphrases the original document, thus changing the structure of sentences. Moreover, it also helps manage the grammatical errors or inconsistencies associated with the extraction-based summarization technique. Having said that, you can look towards an online summarizer to automate both these types of summarization processes.

Named Entity Recognition

Another one of the fascinating Natural Language Processing techniques is NER. Named Entity Recognition (NER) is a subfield of information extraction that manages the location and classification of named entities in an unstructured text and turns them into predefined categories. These categories include names of persons, dates, events, locations, etc.

NER is, by and large, much like keyword extraction, except that it puts extracted keywords in predefined categories. So, you can consider NER an extension of keyword extraction in that it takes it one step ahead. SpaCy offers built-in capabilities to carry out NER.

Conclusion

NLP techniques or NLP methods, like tokenization, stemming, lemmatization, and stop word removal, are used in all-natural language processing applications. These Natural Language Processing techniques fall under the domain of preprocessing. Similarly, keyword extraction, TF-IDF, and text summarization are helpful when analyzing texts. But these Natural Language Processing techniques also serve as the cornerstone of NLP model training and Natural Language Processing techniques.

If you want to deploy an NLP application, contact us at [email protected].

FAQs

Future NLP techniques may involve advanced transformer architectures, enhanced pre-training methods, and improved context-aware models.

Yes, NLP is valuable for data science, enabling the extraction of insights from unstructured text data, sentiment analysis, and language understanding.

Yes, ChatGPT is a natural language processing (NLP) model developed by OpenAI.

The three pillars of NLP are syntax (structure of language), semantics (meaning of words and sentences), and pragmatics (language use in context).

The future of NLP in data science involves more sophisticated language models, seamless integration with analytics tools, and broader applications in understanding and generating human-like text.