Domain-specific technologies characterize the modern era. Organizations rely on a diverse set of tools and technologies to fuel business growth, and many run several database platforms side by side to design, optimize, and automate transactional data storage. However, spreading data across multiple platforms becomes a hassle when you need it in one place for analytics and reporting. This is where the server-to-server data migration utility in Python helps.

Introduction to Server-To-Server Data Migration

The server-to-server data migration utility is a Python tool that automates moving data between database platforms hosted on different servers. The data travels as Parquet files through an intermediate channel such as FTP or email. You can use it to consolidate the data you need in one database platform and then build a reporting dashboard or an OLAP cube on top of it.

The utility supports data transfer between database platforms such as:

- MySQL

- Microsoft SQL Server

- Oracle Database

- PostgreSQL

- Greenplum

Architecture

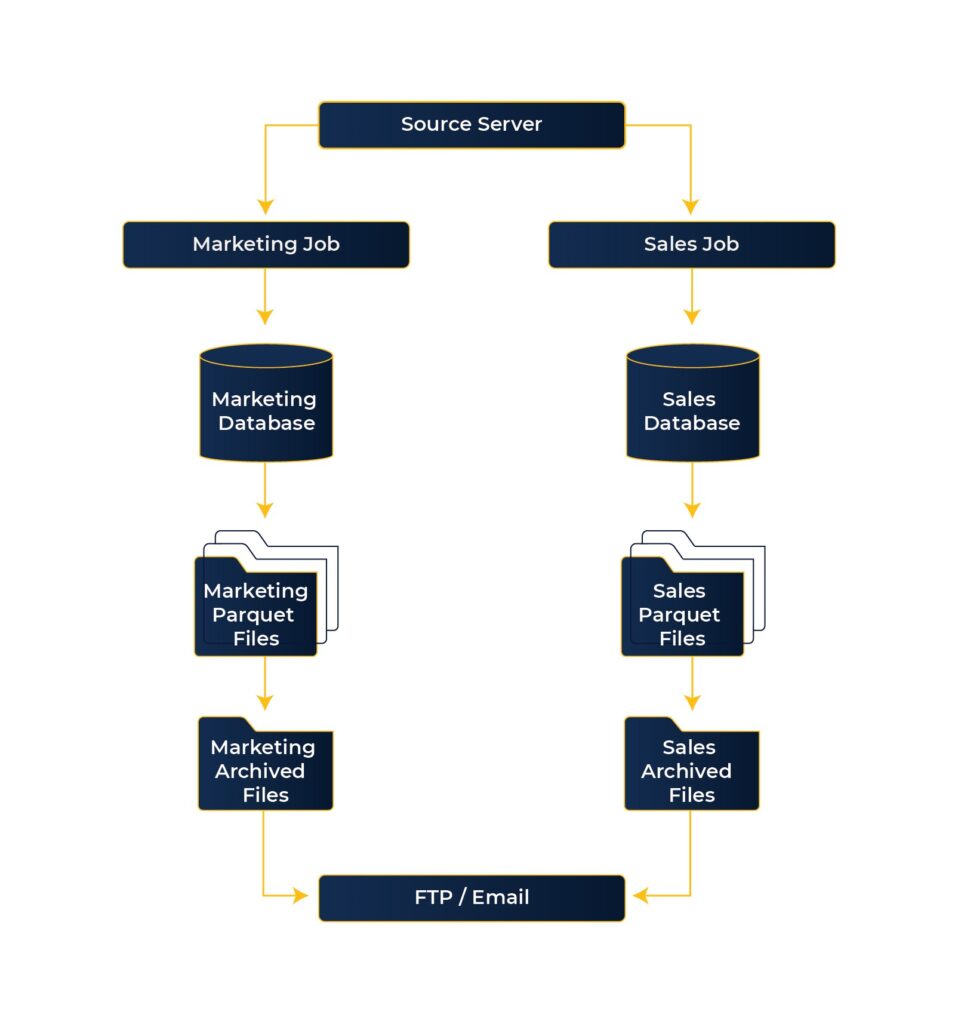

Let’s look at how the utility extracts, migrates, and loads data between servers. The jobs configured on the source server for each module perform the following steps:

- Extract the data from source databases based on the configurations provided.

- Save the data in Parquet format files.

- Archive the Parquet files.

- Upload the archived files to an FTP host or an SMTP host.

The diagram below illustrates the data movement flow from a source server to an FTP/SMTP host.

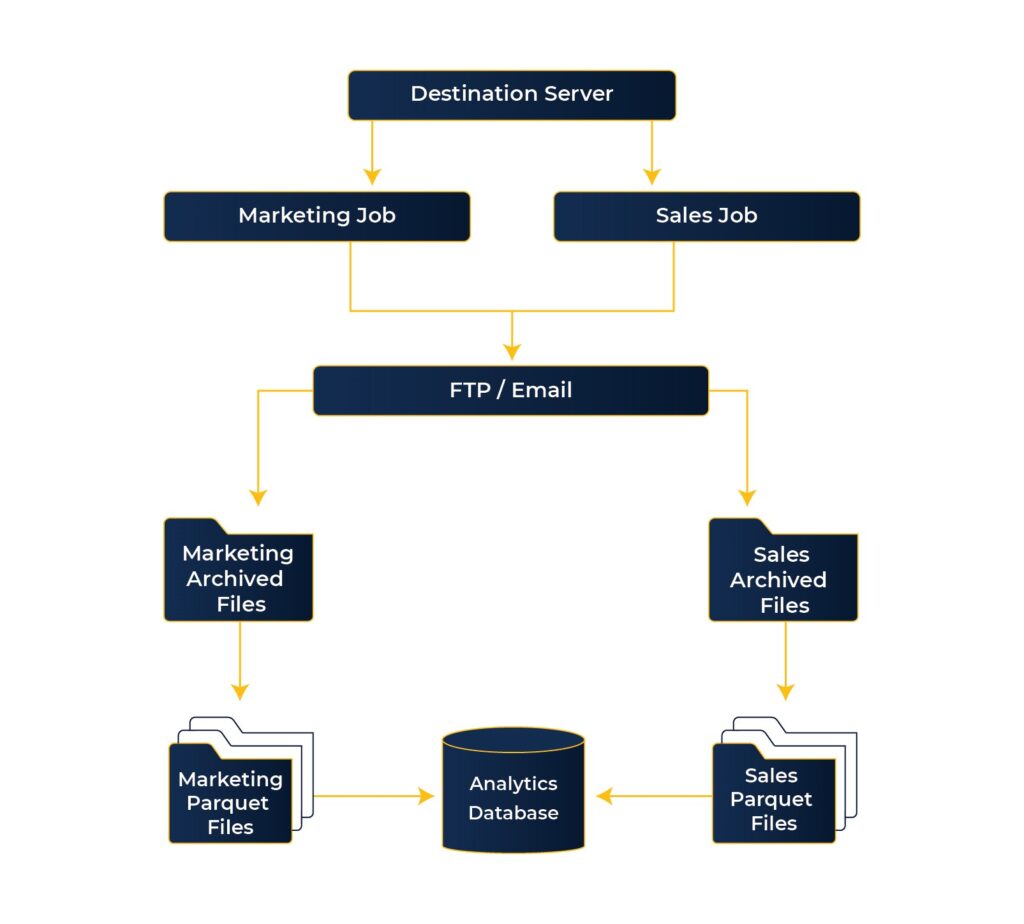
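The source-side steps above can be sketched roughly as follows. This is a minimal illustration, not the utility's actual code: the function names are hypothetical, `sqlite3` stands in for whichever DB-API driver your source database uses, Parquet writing assumes the `pyarrow` package is installed, and only the FTP route is shown (the SMTP route is omitted).

```python
import sqlite3
import zipfile
from ftplib import FTP
from pathlib import Path


def extract_rows(conn, query):
    """Run a query on a DB-API connection and return (column_names, rows)."""
    cursor = conn.cursor()
    cursor.execute(query)
    columns = [desc[0] for desc in cursor.description]
    return columns, cursor.fetchall()


def write_parquet(columns, rows, out_path):
    """Save extracted rows as a Parquet file (assumes pyarrow is installed)."""
    # Imported here so the archive and upload helpers work without pyarrow.
    import pyarrow as pa
    import pyarrow.parquet as pq

    # Turn row tuples into one list per column, which pyarrow accepts.
    data = {name: [row[i] for row in rows] for i, name in enumerate(columns)}
    pq.write_table(pa.table(data), out_path)


def archive_files(paths, archive_path):
    """Bundle the Parquet files into a single compressed zip archive."""
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in paths:
            zf.write(path, arcname=Path(path).name)
    return archive_path


def upload_to_ftp(archive_path, host, user, password, remote_dir=None):
    """Upload the archive in binary mode (hypothetical host and credentials)."""
    # ftplib.FTP_TLS can replace FTP when the server supports encrypted sessions.
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        if remote_dir:
            ftp.cwd(remote_dir)
        with open(archive_path, "rb") as fh:
            ftp.storbinary(f"STOR {Path(archive_path).name}", fh)
```

A source job would call these in order: `extract_rows`, `write_parquet`, `archive_files`, then `upload_to_ftp`.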

Jobs on the destination server perform the reverse of this flow:

- Download the archived files from FTP.

- Unarchive the files to individual Parquet files.

- Read the Parquet files.

- Load the data into the destination server databases.
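The destination-side steps can be sketched in the same spirit. Again, this is an illustration under assumptions rather than the utility's real implementation: the function names are hypothetical, `sqlite3` stands in for the destination database driver, reading Parquet assumes `pyarrow` is installed, and the destination table is assumed to exist already.

```python
import sqlite3
import zipfile
from ftplib import FTP
from pathlib import Path


def download_from_ftp(host, user, password, remote_name, local_path):
    """Download one archive from the FTP host (hypothetical credentials)."""
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        with open(local_path, "wb") as fh:
            ftp.retrbinary(f"RETR {remote_name}", fh.write)
    return local_path


def unarchive(archive_path, target_dir):
    """Extract the archive and return the Parquet files it contained."""
    with zipfile.ZipFile(archive_path) as zf:
        zf.extractall(target_dir)
    return sorted(Path(target_dir).glob("*.parquet"))


def read_parquet(path):
    """Read a Parquet file into (column_names, rows); assumes pyarrow."""
    import pyarrow.parquet as pq

    table = pq.read_table(path)
    columns = table.column_names
    rows = [tuple(rec[c] for c in columns) for rec in table.to_pylist()]
    return columns, rows


def load_rows(conn, table_name, columns, rows):
    """Insert rows into an existing table and return the number inserted."""
    # "?" is sqlite3's placeholder style; many other drivers use "%s".
    # table_name and columns must come from trusted configuration only.
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table_name} ({', '.join(columns)}) VALUES ({placeholders})"
    cursor = conn.cursor()
    cursor.executemany(sql, rows)
    conn.commit()
    return len(rows)
```

A destination job would call `download_from_ftp`, `unarchive`, and then `read_parquet` and `load_rows` for each extracted file.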

The diagram below illustrates the data movement flow from an FTP/SMTP host to the destination server.

How Secure is this Data Transfer?

Companies need to be confident that their data is secure and protected against loss, unauthorized access, and breaches. Poor data security often results in critical business information being lost or stolen. It also leads to a poor customer experience, which eventually damages your reputation and costs you business.

The server-to-server data migration utility relies on Parquet files to protect data security and integrity. Parquet files that contain sensitive information are protected by a modular encryption mechanism that encrypts and authenticates both the file data and the metadata.

When writing a Parquet file, a random data encryption key (DEK) is generated for each encrypted column and the footer. These keys are used to encrypt the data and the metadata modules in the Parquet file.
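To make the key-generation step concrete, here is a small standard-library illustration of the bookkeeping only: one fresh random DEK per encrypted column, plus one for the footer. It does not encrypt anything and is not how the utility or any Parquet library is implemented; the function name, key labels, and 16-byte key length are assumptions. In practice the Parquet library handles key generation and encryption for you (PyArrow, for example, exposes this through its `pyarrow.parquet.encryption` module).

```python
import secrets


def generate_data_keys(encrypted_columns, key_bytes=16):
    """Return one random DEK per encrypted column, plus one for the footer."""
    keys = {
        f"column:{name}": secrets.token_bytes(key_bytes)
        for name in encrypted_columns
    }
    keys["footer"] = secrets.token_bytes(key_bytes)
    return keys


# Hypothetical sensitive columns; each gets its own independent key.
data_keys = generate_data_keys(["ssn", "email"])
```

Because every column has its own key, access can be granted to some columns of a file without exposing the others.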

Utility Setup

The steps for setting this utility up are pretty straightforward. Here’s what you need to do:

- Download the utility setup.

- Update the database and file configurations.

- Schedule the utility to run at the desired frequency.
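The utility's actual configuration format is not shown here, so the following is purely a hypothetical sketch of what a database and file configuration might look like and how a job could validate it at startup. Every field name below is an assumption made for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class MigrationJob:
    """One hypothetical job definition; all field names are illustrative."""
    source_dsn: str
    query: str
    parquet_dir: str
    ftp_host: str
    ftp_user: str
    destination_table: str


def load_jobs(config_text):
    """Parse a JSON list of jobs; raises TypeError on missing or unknown keys."""
    return [MigrationJob(**entry) for entry in json.loads(config_text)]


example_config = json.dumps([{
    "source_dsn": "mysql://reporting@source-host/sales",
    "query": "SELECT * FROM orders",
    "parquet_dir": "/tmp/export",
    "ftp_host": "ftp.example.com",
    "ftp_user": "migration",
    "destination_table": "orders",
}])
jobs = load_jobs(example_config)
```

Passwords are deliberately left out of this sketch; reading them from environment variables or a secrets store is usually safer than keeping them in a file. Scheduling is typically handled by the operating system's scheduler, such as cron on Linux or Task Scheduler on Windows.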

Conclusion

Server-to-server migration between multiple database platforms has become a necessity for many organizations that use their data to improve their business. However, these migrations are often tricky and time-consuming.

But you need not worry. This utility helps you execute data migrations and SharePoint migrations efficiently while saving you time and repetitive effort.

If you need to set up the server-to-server data migration utility or require support from Xavor to update the utility for your specific use case, please feel free to contact us at [email protected]. Xavor has a highly qualified data team that constantly works on developing and maintaining such utilities to address the modern data needs of our clients.